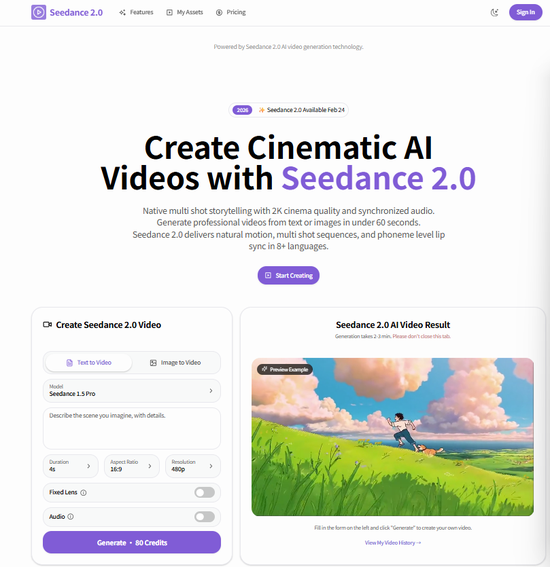

(ECNS) -- Chinese tech giant ByteDance's latest AI video model, Seedance 2.0, has taken the internet by storm since limited internal testing began on Saturday.

The model has attracted attention for its ability to generate highly lifelike videos with fluid camera movement and strong visual consistency. At the same time, its rapid rise has reignited debates over the contentious copyright issues surrounding AI training data.

According to official specifications, Seedance 2.0 can produce cinematic-quality videos from simple text prompts or images. Using a new "dual-branch diffusion transformer" architecture, it processes pixels and audio simultaneously.

This marks a significant departure from earlier AI video tools, which often produced silent, GIF-like clips. With Seedance 2.0, audio is native rather than added later: a racing car scene includes the roar of the engine, while spoken dialogue comes with built-in lip-sync. The sound is not a post-production effect but is generated alongside the video itself.

A research report released by Kaiyuan Securities Co., Ltd. highlighted several key breakthroughs, including autonomous and partitioned camera motion and multi-shot consistency.

Users can input a single prompt or image, after which the system plans a sequence of shots. It retains each character's appearance and the lighting of the scene, keeping the visual style consistent across the entire narrative.

The launch arrives amid a fiercely competitive global landscape for video generation AI, intensified by OpenAI's release of Sora last year. Chinese media outlet Bjnews noted that Seedance 2.0 "appears poised to reshape the competitive landscape."

Feng Ji, producer of "Black Myth: Wukong," posted on Sina Weibo on Monday, praising Seedance 2.0 as "the strongest video generation model on Earth at present, bar none."

While expressing pride in its Chinese origins, Feng also issued a stark warning about the risks that come with AI misinformation. "Hyper-realistic fake videos will become extremely easy to produce, and existing intellectual property and content review systems will face unprecedented challenges," he stated, urging the public to educate family and friends about verifying video sources.

Copyright concerns intensified after tech blogger Tim (Pan Tianhong) disclosed that the model was able to generate audio closely resembling his voice using only a single photo, alongside video footage resembling his company's office building.

"This essentially confirms that Seedance 2.0 has been extensively trained on our company's video content," he remarked.

On Monday evening, another Chinese tech blogger, Lan Xi, revealed on Weibo that in an official ByteDance-operated creator WeChat group, a ByteDance staff member posted a message stating that Seedance 2.0 has garnered far more attention than expected during its internal testing phase.

"To ensure a healthy and sustainable creative environment, we are urgently optimizing the model based on current feedback. Right now, it does not support using real-person materials for its primary references. We deeply understand that the boundary of creativity is respect," the staff member wrote.

"The more powerful AI becomes, the more vigilant we must be against its misuse," the blogger said.

Amid the fervor, AI-related stocks in China rallied on Monday, reflecting heightened market optimism around domestic advancements in generative AI technology.

(By Zhang Dongfang)

Beijing Public Network Security Registration No. 11010202009201
