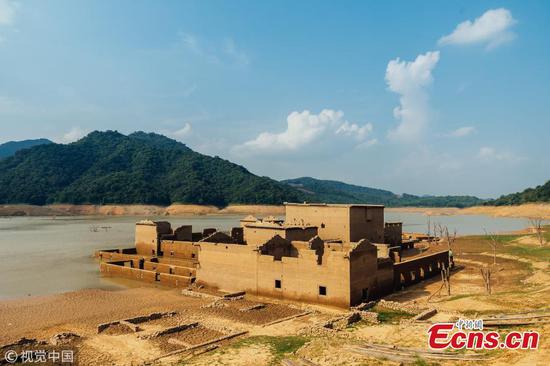

The Huawei Mate 10 model with on-device AI capabilities recognizes food at a display area in Beijing over the weekend. (Photo: Li Xuanmin/GT)

Rising technology steers away from cloud, but experts say it is 'in infancy'

Last year, when the artificial intelligence (AI) program AlphaGo rose to prominence by unexpectedly defeating legendary Go player Lee Se-dol, tech giants and venture capitalists rushed to pump money into the fast-growing sector, much of which relied on cloud-only computation.

But now a new trend is driving the development of embedded AI, a technology that processes data and runs AI algorithms on the device itself, without transferring data to cloud servers.

A cellphone equipped with embedded AI can recognize food and provide real-time calorie information, helping dieters choose healthy dishes. Embedded AI also lets consumers use a microwave oven without setting a time, because the appliance itself instantly judges how long the food needs to cook. And with embedded AI, people can install cameras at home to monitor the safety of elderly relatives and children without worrying about their data being leaked.

These are just a few of the everyday scenarios in which embedded AI could be widely applied, industry insiders pointed out at a forum on embedded AI held over the weekend in Beijing.

Technology application

"So far, most of the development in the AI sector focuses on cloud AI, or computation that is connected to the cloud, but there is a stream of scenarios where on-device computations edge [over cloud computation]," Geng Zengqiang, chief technology officer of China-based operating system (OS) provider Thundersoft Software Technology Co, which organized the event, told the Global Times in an exclusive interview.

Sun Li, vice president of Thundersoft, told the Global Times that in some cloud AI applications, transferring data via the Internet for processing on a cloud server causes a series of problems.

For example, a Boeing 787 jetliner generates 5 gigabytes of data every second in operation, more than almost any commercial wired network can carry, making it "a mission impossible to complete AI computation in the cloud," said Sun.

Another scenario is autonomous driving, which produces nearly 1 gigabyte of data every second and requires real-time algorithms and intelligent decision-making.

"Connecting to the cloud and transmitting data back to the vehicle would cost a great amount of time, pushing up driving risks," Geng said.
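To put the two data rates quoted above in perspective, the short calculation below converts them into the live network bandwidth they would need and the volume they pile up in an hour. It is a back-of-the-envelope sketch, assuming decimal units (1 GB = 1,000 MB, 1 TB = 1,000 GB) and that all raw data would be streamed to the cloud as it is produced; the function names are illustrative only.

```python
# Back-of-the-envelope data volumes for the two scenarios in the article:
# about 5 GB/s for a Boeing 787 and about 1 GB/s for an autonomous car.
# Assumption: decimal units (1 TB = 1,000 GB) and all raw data streamed live.

BITS_PER_BYTE = 8
SECONDS_PER_HOUR = 3600


def required_gbps(gigabytes_per_second: float) -> float:
    """Link bandwidth, in gigabits per second, needed to upload the data live."""
    return gigabytes_per_second * BITS_PER_BYTE


def terabytes_per_hour(gigabytes_per_second: float) -> float:
    """Data accumulated in one hour of operation, in terabytes."""
    return gigabytes_per_second * SECONDS_PER_HOUR / 1000


for name, rate in [("Boeing 787", 5.0), ("autonomous car", 1.0)]:
    print(f"{name}: {required_gbps(rate):.0f} Gbit/s, "
          f"{terabytes_per_hour(rate):.1f} TB per hour")
```

Under these assumptions the jetliner would need a sustained 40 Gbit/s uplink and would produce 18 TB per flight hour, which is the gap on-device processing is meant to close.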

Privacy is another factor. As a growing number of Chinese users raise concerns over the privacy of smart home appliances, on-device AI, which can function without connecting to the Internet, safeguards their personal privacy, Sun noted.

Against this backdrop, "the year 2017 is promising for embedded AI technology to take off, and that has become an industry consensus," Geng said, pointing to huge market potential.

He predicted that future AI applications would be dominated by neither cloud nor on-device computation, but by a combination of the two.

In the future, "on-device AI should be able to detect and process raw data and run algorithms beforehand, and after filtering, more valuable data will be transferred to the cloud, forming a big database," Geng said.
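The hybrid model Geng describes, where the device processes raw data first and only the more valuable part is sent to the cloud, can be sketched in a few lines. This is a minimal illustration, not Thundersoft's design: the `Reading` type, the scoring rule (deviation from the batch mean, standing in for a real on-device model) and the upload threshold are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Reading:
    sensor_id: str
    value: float


def score_on_device(readings: List[Reading]) -> List[float]:
    """Hypothetical stand-in for an on-device model: scores each reading
    by its absolute deviation from the batch mean."""
    baseline = mean(r.value for r in readings)
    return [abs(r.value - baseline) for r in readings]


def filter_for_upload(readings: List[Reading], threshold: float) -> List[Reading]:
    """Keep only readings whose score exceeds the threshold; the rest are
    handled locally and never leave the device."""
    scores = score_on_device(readings)
    return [r for r, s in zip(readings, scores) if s > threshold]


raw = [Reading("cam-1", v) for v in (10.0, 10.2, 9.9, 25.0, 10.1)]
to_cloud = filter_for_upload(raw, threshold=5.0)
print(f"uploading {len(to_cloud)} of {len(raw)} readings:",
      [r.value for r in to_cloud])
```

Here only the outlying reading (25.0) is uploaded, so the cloud receives a fraction of the raw stream while routine data stays on the device, which is also what addresses the privacy concern raised earlier.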

Commenting on the trend, Sun Gang, global vice president of US-based tech giant Qualcomm Technologies, also proposed a model in which deep learning is carried out in the cloud while the device makes the intelligent decisions.

He also said at the forum that the smartphone, with expected global shipments of 8.5 billion units over the next five years, is likely to be the first type of mobile device to widely adopt embedded AI technology.

In on-device AI applications for smartphones, China is a pioneer, or at least not a latecomer compared with foreign rivals, despite gaps in the underlying platforms for on-device AI, namely chips and operating systems, which are currently led by US companies Qualcomm and Google, Geng said.

In October, Chinese telecom heavyweight Huawei unveiled its new Mate 10, powered by the Kirin 970 processor with on-device AI capabilities.

Using its sensors and cameras, the handset can perform real-time image recognition and language translation and respond to voice commands.

Yu Chengdong, CEO of Huawei's terminal service department, said that the Mate 10 is 20 times faster at image recognition than handsets from foreign smartphone vendors, news website ifeng.com reported in October.

For example, "it takes only 5 seconds for the Mate 10 to recognize 100 photos, but for the iPhone 8 Plus and Samsung Note 8, such time skyrockets to 9 seconds and about 100 seconds, respectively," Yu was quoted as saying in the report.
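Taking Yu's quoted times at face value, a quick calculation shows where the "20 times" figure comes from: it matches the comparison with the Note 8 (100 seconds against 5), while the gap with the iPhone 8 Plus is under twofold. The helper below is purely illustrative.

```python
from typing import Dict

# Seconds to recognize 100 photos, as quoted by Yu.
times: Dict[str, float] = {"Mate 10": 5, "iPhone 8 Plus": 9, "Samsung Note 8": 100}


def speedups(times: Dict[str, float], reference: str) -> Dict[str, float]:
    """How many times faster the reference phone is than each other phone."""
    base = times[reference]
    return {phone: t / base for phone, t in times.items() if phone != reference}


for phone, factor in speedups(times, "Mate 10").items():
    print(f"Mate 10 vs {phone}: {factor:.1f}x faster")
```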

Geng also highlighted China's large pool of AI professionals, most of whom studied and trained overseas, as a factor in closing the gap with foreign counterparts.

Barriers ahead

At the forum, industry insiders also pointed to a number of technological barriers, stressing that embedded AI is still in its "infant period," with a limited range of applications.

This is because the power consumption, heat dissipation and size of mobile devices constrain how efficiently embedded AI can run, said Sun Gang of Qualcomm.

So far, even the highest-performing chips cannot keep up with growing on-device AI workloads, Geng added.

Geng's comment was echoed by Chen Yunji, co-founder of Beijing-based AI chip start-up Cambricon Technologies Corp.

"Back in the 1990s, a similar problem of insufficient computing capacity also appeared in the graphics processing sector… it was not until the invention of the specialized graphics processing unit chip that the problem was addressed at that time," Chen said at the forum, urging chipmakers to step up efforts to develop chips with stronger deep learning capabilities.

But developing such chips is costly, which creates another headache.

"Are consumers willing to pay for a more expensive mobile device with on-device AI capacity? Tech companies still need to balance the costs and revenues," Geng said.

Another way to tackle the issue is to optimize on-device algorithms so that they place lower demands on chips, Tang Wenbin, chief technology officer of Face++, a Beijing-based tech start-up specializing in facial recognition, said at the forum.

Tang noted that Face++ is now working on a revised version of ShuffleNet, its efficient neural network architecture for mobile devices, with the aim of making data computation ten times faster than at present.
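ShuffleNet, as published by Face++ researchers in 2017, saves computation mainly by splitting 1×1 (pointwise) convolutions into groups and then "shuffling" channels so that information still flows between groups. The sketch below illustrates only those two published ideas, not the revised model Tang mentions, whose details were not disclosed; the layer size used is hypothetical.

```python
from typing import List


def pointwise_macs(height: int, width: int, c_in: int, c_out: int,
                   groups: int = 1) -> int:
    """Multiply-accumulate count of a 1x1 convolution. With `groups` groups,
    each output channel only sees c_in // groups input channels."""
    assert c_in % groups == 0 and c_out % groups == 0
    return height * width * (c_in // groups) * c_out


def channel_shuffle(channels: List[int], groups: int) -> List[int]:
    """Reorder channels as ShuffleNet does: view them as a (groups, n) grid,
    transpose it and flatten, so the next grouped layer mixes every group."""
    assert len(channels) % groups == 0
    per_group = len(channels) // groups
    grid = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    return [grid[g][i] for i in range(per_group) for g in range(groups)]


# Hypothetical layer: 28x28 feature map, 240 input and 240 output channels.
dense = pointwise_macs(28, 28, 240, 240)
grouped = pointwise_macs(28, 28, 240, 240, groups=3)
print(f"dense: {dense:,} MACs, 3 groups: {grouped:,} MACs "
      f"({dense / grouped:.0f}x fewer)")

print(channel_shuffle(list(range(6)), groups=3))  # [0, 2, 4, 1, 3, 5]
```

Grouping divides the pointwise cost by the number of groups, and the shuffle is a cheap reordering rather than extra arithmetic, which is why this kind of algorithm-level change can lower the demands on mobile chips.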